GPU Rankings: Top 5 GPUs Leading the Tech Frontier

Graphics processing units (GPUs) are pivotal to modern computing, powering AI, high-performance computing, data centers, and visualization. This article ranks the top 5 GPUs and analyzes their technical strengths and applications for tech enthusiasts.

GPU Market Overview: Innovation Powers Diverse Applications

The rise of AI, machine learning (ML), big data analytics, cloud gaming, and edge computing has expanded GPU applications far beyond traditional graphics rendering. GPUs now underpin high-performance computing (HPC), AI training and inference, and real-time data processing. Data centers rely on powerful GPUs to handle complex model training, professional workstations depend on them for 3D modeling and real-time rendering, and edge devices demand low-power, high-efficiency GPUs.

Against this backdrop, industry leaders like NVIDIA, AMD, and Intel have introduced next-generation GPUs through architectural advancements, cutting-edge manufacturing processes, and robust software ecosystems. This top 5 GPU ranking, based on performance, efficiency, compatibility, and market feedback, offers a clear reference for those seeking the best in GPU technology.

Top 5 GPU Rankings

1. NVIDIA H100 Tensor Core GPU

The NVIDIA H100, built on the Hopper architecture, leverages a 4nm process and integrates 80 billion transistors. Supporting FP8 precision computing, it delivers up to 3.3 PFLOPS of AI performance. Equipped with HBM3 memory and roughly 3TB/s of memory bandwidth, it’s tailored for large-scale AI workloads. Its Multi-Instance GPU (MIG) technology allows a single card to be partitioned into as many as seven isolated instances, adapting flexibly to diverse tasks.
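One way to read the compute and bandwidth figures together is the roofline model, which estimates how many floating-point operations a kernel must perform per byte of memory traffic before compute, rather than memory, becomes the limit. The sketch below is illustrative only: it plugs in the vendor peak figures quoted above, and the function names and the 100 FLOPs-per-byte kernel are assumptions made for the example.

```python
def ridge_point(peak_flops: float, bandwidth_bytes_per_s: float) -> float:
    """Arithmetic intensity (FLOPs per byte) at which a kernel stops being memory-bound."""
    return peak_flops / bandwidth_bytes_per_s


def attainable_flops(intensity: float, peak_flops: float,
                     bandwidth_bytes_per_s: float) -> float:
    """Roofline estimate: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, intensity * bandwidth_bytes_per_s)


# Peak figures quoted in this article for the H100 (vendor peaks, not measurements).
H100_PEAK_FP8 = 3.3e15   # FLOP/s
H100_BANDWIDTH = 3.0e12  # bytes/s

h100_ridge = ridge_point(H100_PEAK_FP8, H100_BANDWIDTH)
print(f"Ridge point: {h100_ridge:.0f} FLOPs per byte")

# Hypothetical kernel performing 100 FLOPs per byte moved: bandwidth is the limit.
capped = attainable_flops(100, H100_PEAK_FP8, H100_BANDWIDTH)
print(f"Attainable: {capped / 1e12:.0f} TFLOP/s")
```

Kernels whose intensity falls well below the ridge point gain more from memory bandwidth than from additional peak FLOPS, which is why both numbers matter when comparing cards.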

The H100 excels in AI research, deep learning, and natural language processing (NLP), making it ideal for generative AI applications like large language models. A major cloud platform reported that the H100 accelerated complex AI model training by 2.5 times compared to the previous-generation A100, significantly reducing development timelines. Paired with the NVIDIA AI Enterprise software suite, the H100 streamlines AI development, offering robust support for cutting-edge applications.

2. AMD Instinct MI300X

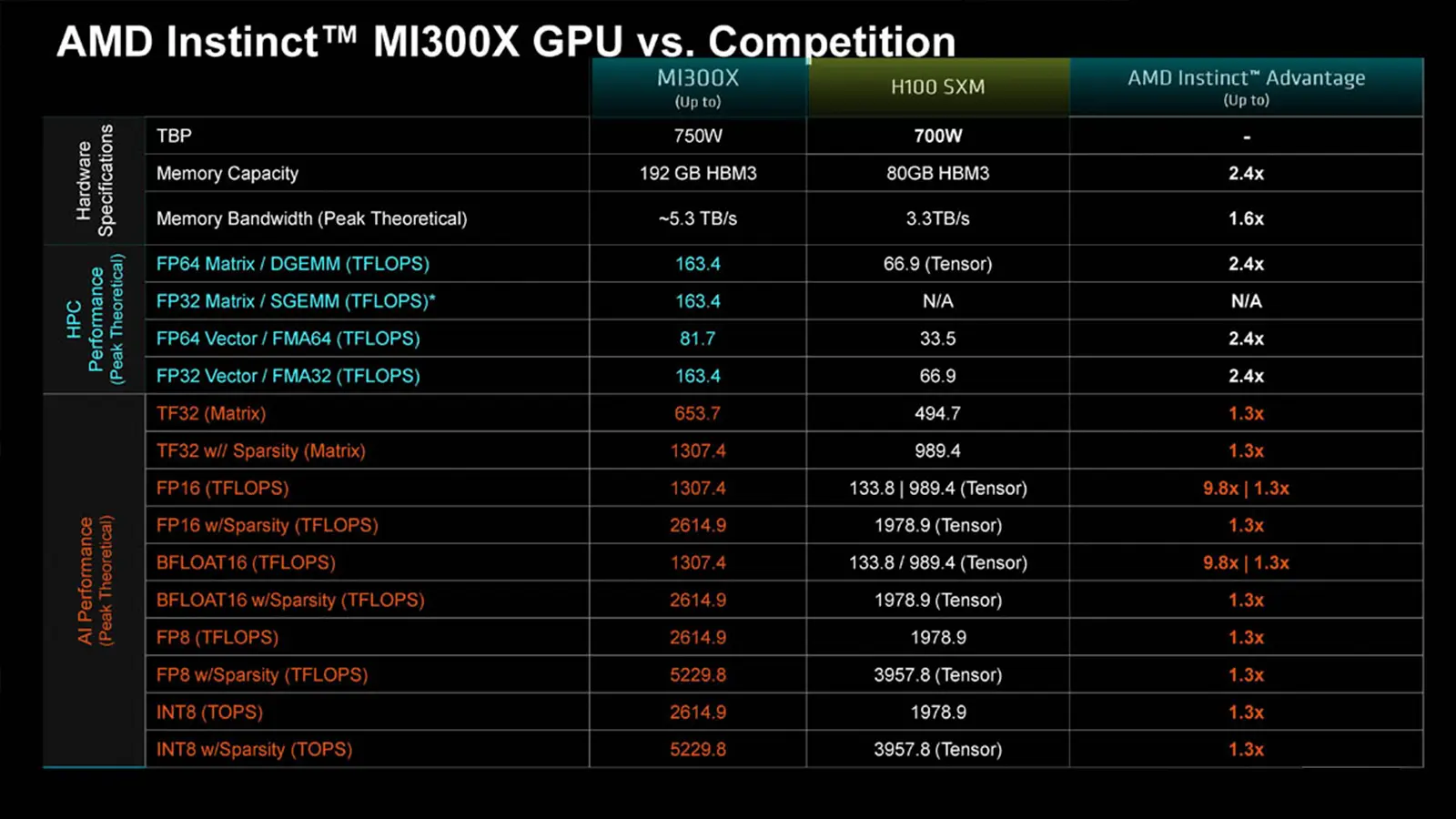

The AMD Instinct MI300X, based on the CDNA 3 architecture, integrates 153 billion transistors and features 192GB of HBM3 memory, delivering up to 2.6 PFLOPS of peak FP16 performance (with sparsity). Its chiplet-based modular design enhances yield and scalability, balancing performance and cost. The open-source ROCm software platform provides developers with a flexible programming environment.

The MI300X shines in high-performance computing and AI workloads, serving supercomputing centers, scientific simulations, and financial modeling. A European supercomputing center noted that the MI300X matched the performance of pricier competitors in molecular dynamics simulations while reducing power consumption by approximately 15%, highlighting its exceptional energy efficiency.
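To put the reported figure in perspective, equal performance at roughly 15% lower power translates into a somewhat larger gain in performance per watt. A minimal sketch of that arithmetic, assuming performance is exactly matched and power is exactly 85% of the baseline (the function name is illustrative):

```python
def perf_per_watt_gain(relative_performance: float, relative_power: float) -> float:
    """Ratio of the new performance-per-watt to the baseline's."""
    return relative_performance / relative_power


# Same performance (1.0x) at 85% of the baseline power draw.
gain = perf_per_watt_gain(1.0, 0.85)
print(f"{(gain - 1) * 100:.1f}% better performance per watt")
```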

3. NVIDIA A100 80GB

Built on the Ampere architecture, the NVIDIA A100 80GB pairs 80GB of HBM2e memory with roughly 2TB/s of bandwidth and supports FP64 Tensor Core computing. Its NVLink 3.0 technology enables efficient multi-GPU interconnectivity, making it suitable for diverse workloads. The A100 is widely used in AI inference, data analytics, and professional visualization.

With a mature software ecosystem, the A100 is a reliable choice. A technology firm reported a 40% boost in real-time data processing speeds using the A100, significantly enhancing operational efficiency. Its compatibility and extensive application support make it a versatile option across multiple scenarios.

4. Intel Data Center GPU Max 1550

The Intel Data Center GPU Max 1550, based on the Xe-HPC architecture, combines tiles built on several process nodes (including Intel 7 and TSMC N5) and integrates more than 100 billion transistors. Supporting FP64 computing, it offers 128 Xe cores. Its high-density tile design optimizes power efficiency, with single-card consumption capped at 600W. The oneAPI software ecosystem integrates Intel CPUs and GPUs under one programming model, boosting development productivity.

The Max 1550 excels in high-performance computing and cloud rendering, catering to weather simulations, genomics, and film post-production. An animation studio reported that the Max 1550 improved 4K rendering speeds by 30% compared to its predecessor, shortening project timelines significantly.

5. AMD Radeon Pro W7900

The AMD Radeon Pro W7900, based on the RDNA 3 architecture, features 48GB of GDDR6 memory, supports AV1 encoding/decoding, and delivers 61 TFLOPS of single-precision performance. With a 20% improvement in power efficiency over its predecessor, it’s designed for professional workstations. The W7900 is optimized for CAD design, 3D modeling, and real-time rendering, serving architecture, automotive design, and game development.

Known for its cost-effectiveness and certifications with professional software like Autodesk and Adobe, the W7900 is a strong contender. A design team noted that the W7900 maintained stability in multi-task rendering, reducing project delivery times by about 20%. Its multi-display support (up to 8K resolution) meets complex visualization needs.

Key Considerations for Choosing a GPU

Performance and Application Fit

Selecting a GPU requires aligning its capabilities with specific use cases. AI training demands high FP8/FP16 performance (e.g., H100, MI300X), while rendering prioritizes memory capacity and display output (e.g., W7900). Matching performance to needs maximizes efficiency.
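The matching step can be sketched as a simple lookup. The example below is only an illustration: the workload tags are shorthand for the application areas this article attributes to each card, and the `Gpu` class and `candidates` helper are hypothetical names, not part of any vendor tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    workloads: frozenset  # application areas named in this article


# Tags paraphrase the use cases listed for each card in the ranking above.
CATALOG = [
    Gpu("NVIDIA H100", frozenset({"ai_training", "nlp"})),
    Gpu("AMD Instinct MI300X", frozenset({"ai_training", "hpc"})),
    Gpu("NVIDIA A100 80GB", frozenset({"ai_inference", "data_analytics", "visualization"})),
    Gpu("Intel Data Center GPU Max 1550", frozenset({"hpc", "cloud_rendering"})),
    Gpu("AMD Radeon Pro W7900", frozenset({"rendering", "cad", "visualization"})),
]


def candidates(workload: str) -> list:
    """Return the names of catalog entries tagged with the given workload."""
    return [gpu.name for gpu in CATALOG if workload in gpu.workloads]


print(candidates("ai_training"))
print(candidates("rendering"))
```

A shortlist like this is only a starting point; the final choice still depends on measured performance for the actual workload.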

Software Ecosystem and Compatibility

The software ecosystems—NVIDIA’s CUDA, AMD’s ROCm, and Intel’s oneAPI—impact development efficiency. Choosing a GPU compatible with existing tech stacks reduces integration challenges and accelerates project deployment.
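A quick way to see which of these stacks a machine is already set up for is to look for each toolkit's compiler on the PATH. This is a rough heuristic, assuming the standard compiler names (`nvcc` for CUDA, `hipcc` for ROCm, `icpx` for oneAPI); it says nothing about driver versions or whether a matching GPU is installed, and `detect_stacks` is a name chosen for this sketch.

```python
import shutil

# Compiler executable shipped with each toolkit.
TOOLKIT_COMPILERS = {
    "CUDA": "nvcc",
    "ROCm": "hipcc",
    "oneAPI": "icpx",
}


def detect_stacks(which=shutil.which) -> list:
    """List toolkits whose compiler is found on PATH.

    `which` is injectable so the lookup can be exercised without the tools installed.
    """
    return [stack for stack, exe in TOOLKIT_COMPILERS.items() if which(exe)]


print(detect_stacks() or "No GPU toolkit compiler found on PATH")
```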

Total Cost of Ownership (TCO)

Consider procurement costs, power consumption, and maintenance. The MI300X and W7900 offer strong value, while the H100 and A100 cater to those prioritizing peak performance.
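These three components can be combined into a rough estimate. The sketch below is a simplified model, not a pricing tool: the purchase price, electricity rate, and maintenance figure are placeholder assumptions, and only the 600W power cap comes from this article (the Max 1550). Cooling, hosting, and depreciation are ignored.

```python
HOURS_PER_YEAR = 24 * 365


def total_cost_of_ownership(purchase_price: float, board_power_watts: float,
                            years: float, price_per_kwh: float,
                            utilization: float = 1.0,
                            annual_maintenance: float = 0.0) -> float:
    """Purchase price plus energy and maintenance over the ownership period."""
    energy_kwh = board_power_watts / 1000 * HOURS_PER_YEAR * years * utilization
    return purchase_price + energy_kwh * price_per_kwh + annual_maintenance * years


# Placeholder inputs: price, electricity rate, and maintenance are hypothetical.
example_tco = total_cost_of_ownership(
    purchase_price=10_000,
    board_power_watts=600,   # power cap quoted above for the Max 1550
    years=3,
    price_per_kwh=0.12,
    annual_maintenance=500,
)
print(f"Three-year TCO: {example_tco:,.2f}")
```

Running the same calculation for each shortlisted card with real quotes and local energy prices makes the trade-off between purchase price and operating cost explicit.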

Scalability and Future-Proofing

The H100’s MIG technology and A100’s NVLink support provide flexibility for future expansion, ideal for long-term planning.

Market Trends and Future Outlook

The GPU market reflects the following trends:

- Surging AI Demand: The proliferation of generative AI and large models fuels demand for high-performance GPUs, with the H100 and MI300X leading the charge.

- Focus on Efficiency: Rising energy costs spotlight power-efficient designs like the Max 1550, supporting sustainability goals.

- Open-Source Ecosystems: AMD’s ROCm and Intel’s oneAPI offer developers alternatives, challenging NVIDIA’s CUDA dominance.

- Edge Computing Growth: Low-power, high-efficiency GPUs are gaining traction in edge AI and IoT applications.

Looking ahead, advancements in sub-5nm processes are expected to further boost GPU performance, and early quantum computing applications may come to complement GPU-based systems. Staying informed about technological roadmaps will aid in strategic planning.

Conclusion

The GPU rankings highlight the pinnacle of GPU innovation: the NVIDIA H100 and AMD Instinct MI300X dominate AI and high-performance computing, the A100 and Max 1550 offer reliability across diverse applications, and the W7900 delivers value for professional visualization. When selecting a GPU, consider performance, cost, ecosystem, and scalability to align with specific needs. Through informed choices, GPUs will continue to drive technological breakthroughs and efficiency gains.